Radiation Measurements without Posing the Inversion Problem

Tibro Papp*, Maxwell JA, Raics P, Balkay L and Gál J

DOI: 10.21767/2472-1948.100009

1Cambridge Scientific, 3A-47 Surrey Street East, Guelph, ON, N1H 3P6, Canada

2Institute for Nuclear Research, Hungarian Academy of Sciences, Bem ter 18/c Debrecen, H-4026, Hungary

3Institute of Experimental Physics, University of Debrecen, Bem ter 18/a Debrecen, H-4026, Hungary

4Institute of Nuclear Medicine, Medical and Health Science Center, University of Debrecen, Nagyerdei krt. 98, H-4032 Debrecen, Hungary

Corresponding Author:

Tibro Papp

Institute for Nuclear Research, Hungarian Academy of Sciences

Bem ter 18/c Debrecen, H-4026, Hungary

Tel: +1 226-780-5471

E-mail: tibpapp@netscape.net

Received date: February 25, 2016; Accepted date: March 10, 2016; Published date: March 17, 2016

Citation: Papp T, Maxwell JA, Raics P, et al. Radiation Measurements without Posing the Inversion Problem. J Sci Ind Metrol. 2016, 1:9. doi: 10.4172/2472-1948.100009

Abstract

Purpose:

Radiation measurement is an exponential analysis, which is an “ill-posed” problem whose solution is not unique. Therefore, the accuracy of the data points is critical. If the radiation detector is sensitive during the dead time, an extended dead time signal processing method is usually necessary, and the calculation of the dead time and pile up rate invokes the inversion problem, which is difficult to solve and can only be approximated. We have used two new approaches in which the inversion problem is not posed and there is no need to assume a Poisson distribution or constant source strength. They go beyond all previous techniques by handling the presence of discriminators. The preamplifier-signal processing chain has dead times and applies discriminators; as a consequence it disturbs the randomness, and other distributions may also contribute. Although an undisturbed nuclear decay should be Poisson in nature, the measured spectrum usually does not have a Poisson distribution. The robustness of the two methods was investigated, focusing on the traceable derivation of statistical uncertainty. The two new methods were applied to gamma spectra measurements of 152Eu calibration sources and to the nuclear half-life of the 68Ga PET isotope, and were compared with measurements using previous techniques.

Methods:

CSX (Cambridge Scientific) digital signal processors were used with five HPGe detectors in two operation modes in two measurement series. In quality assurance mode, the CSX processes all events, both the accepted and rejected ones, placing each event into one or more spectra based on the applied discriminators. Based on the accepted spectrum, the rejected spectra were analyzed to determine the single, double and triple events, as well as the rates of unrelated events and noise events, in order to obtain the true input counts. The second, independent approach was a time interval histogram analysis for the measurement of the gamma ray intensities. In time interval histogram mode, the CSX creates an energy spectrum as well as an interval histogram of arrival times between successive events. This operation mode does not apply discriminators and has no dead time. The CSX signal processor was selected because it offers dead time free operation with a pile up rate of less than 1% up to an input rate of about a million counts per second, as well as quality assurance at the signal processing level.

Results:

The CSX processors employ a non-extended dead time approach and in quality assurance mode the true input rate is readily determined. As a consequence, the inversion problem was avoided. The uncertainties and uncertainty propagation are clearly justified. For the half-life measurement of 68Ga, the time interval histogram analysis gave six times smaller standard uncertainty than the square root of the counts. The inter-arrival time histogram was sensitive to non-deterministic behaviour of the detectors, and electronic disturbances, discrediting several measurement series. This additional ability is significant for quality measurements.

Conclusion:

Spectroscopy with HPGe detectors has lacked a method to credibly determine the uncertainties when discrimination is applied. In addition, a calibration procedure was required to establish the output rate versus input rate relations for each input rate preferably with a similar spectral distribution as the spectrum of interest. The preamplifier signal shape varied as a function of the input rate for each of the HPGe detectors studied. In such cases both the accepted and rejected event spectra are necessary for a proper evaluation. Accounting for all the events allows the determination of the uncertainties. The time interval histogram analysis is simple and straightforward, and offers an elegant way to determine the true input rate and uncertainty. The measurement can be applied over large input counting ranges, and does not require calibration.

Keywords

Exponential analysis; Inversion problem in radiation detection; Extended dead time; Non-extended dead time; Time interval histogram analysis; 68Ga; 152Eu; Compton background; Quality assurance; Digital signal processing; Pile up; Half-life; HPGe detectors

Introduction

In X-ray spectrometry with solid state detectors there are serious discrepancies and contradictions in the X-ray database and X-ray measurements [1-4]. It is instructive to look at the scatter of the data in the recent compilation of experimental proton induced L-shell cross sections [5]. It is quite obvious that one of the main reasons for the scatter is that the signal processors are not supplying the necessary information.

The signal from the detector preamplifier system has contributions from real events, noise triggered events, incompletely developed pulses and electronic disturbances. These additional processes have their own time distributions, not necessarily Poisson in nature. Discriminators are necessary to remove events from unrelated processes as well as to make a better quality spectrum for analysis. Discriminators are meant to discriminate against some events, but the number of discriminated events depends on the knowledge and experience of the user or analyst, the constancy of noise and electric disturbances, the input rates, and events with other origins. The approach of the selected Cambridge Scientific CSX line of processors is to always process all events and create two spectra: one for the events passing all the discriminator criteria (we call these accepted or desirable events), and one for the events failing one or more discriminator criteria (we call these rejected or non-desirable events). Evaluating both spectra allows determination of the true input rate [6] and the origin of many detector tailing features. These fully digital signal processors (DSP-s) have performed excellently in the field of source measurements.

We have had several requests for measurements in the field of gamma-ray spectroscopy to see what advantages this methodology can offer. The signals coming from the preamplifiers of X-ray and gamma ray detectors are very similar. In X-ray detectors the event generated signal is much smaller, which the DSP has handled excellently, achieving the best line shape and resolution. We were able to see signals down to 60 eV, and to generate the lowest plateau, close to the theoretical minimum level suggested by basic electron transport processes [7]. Germanium detectors have a larger volume with all the associated issues, many more partially collected events due to Compton scattering, and generally more instances of detector imperfections and incomplete charge collection. We have made measurements on 152Eu radioactive sources in a Metrology Institute on their recently commissioned gamma detector, as well as separately in a Nuclear Research Center, with their own HPGe detector. Other measurements include a half-life measurement of 68Ga at a Medical School, with their three HPGe detectors. This we consider significant, as we were able to determine the true input rate using several approaches. The rejected event spectra allowed the separation of the rejected events into single and pile up events. Knowing the origin of the rejected spectra, and sorting them into unrelated, true, distorted and noisy events, allows a better error estimate. A proper error estimate is imperative, as the compilations tend to use an error weighted average approach, which tends to ignore most measurements except those with the smallest reported error. That error is often claimed to be less than one part per thousand and is based on the repeatability of a measurement, as opposed to any fundamental error analysis and knowledge of the equipment. We also applied arrival time histogram analysis to confirm the input rates and to have deeper quality control, as the arrival time distribution offers a more sensitive quality assurance verification.

Quality Assurance Capable Signal Processing

In order to obtain analyzable spectra all signal processors or data collection systems have used one or more discriminators to reject unwanted events. However it is necessary to know the reason for the rejection. Previous signal processors have not supplied the necessary information about the spectra, and therefore the true spectra could not be reconstructed.

The difference in this signal processing approach is that it analyses all the events and sorts them into several spectra, based on whether they have passed or failed the discrimination criteria. This offers a quality assurance capability not previously available to the analyst. It is also very useful in training and education, as the reason for rejection can be seen visually, whether it was a noisy event, a pile up of two true events, a pile up with noise, an imperfect signal or an event Compton scattered into the detector.

The CSX DSP-s offer several shaping choices, including truncated cusp, trapezoidal, rectangle and triangle. It counts the event recognition rate, the pre-amplifier reset rate, it allows proper handling of the so-called bucket effect, and it has a user-friendly set-up. All the measurements can be made in setup mode where in addition to the two spectra of accepted and rejected events; the spectra of events rejected by each individual discriminator alone are presented to the analyst. Because all detectors are aging and can change their performance with time and because the electronic noise and disturbances may very well be different at the analyst’s site than in the factory or even from the time of one measurement to another, the optimum manufacturer’s set-up is not necessarily valid in the analyst’s laboratory. If it is noisier, then more events will be rejected than is optimum, and in any case the analyst should establish the electronic efficiency at their measuring time and location. If the rejection criteria are set to be very weak, then the line shape, pileup suppression and c will not be optimum. The CSX signal processors also generate additional spectra for the events rejected by the specific discriminators. In the additional spectra the analyst immediately sees the effect of each discriminator on the measurement, and can choose an optimum value for the discriminator, via the user interface program. The cusp shaping is expected to provide the best resolution; therefore we have used that in these measurements.

The signal processor inspects the signal coming from the preamplifier and decides whether it has the desired quality or should be rejected. The rejected signal is usually counted and indicated in the dead time, and pile up counter with other systems. However, the rejected events will include noise events, noise piled up with noise or real events, single-event rejection peaks for those individual events that do not pass the event discrimination tests, multiple-event pileups as well as non X-ray or gamma ray (particle or high energy) events that generate a signal in the spectrum. Therefore, we have actually independent rates for the noise, the electronics disturbances, distorted events and real events. In order to determine the true event input rate we might have to determine up to four separate rates and the usual systems do not offer the necessary information. We have developed a procedure to account for these different factors and determine the true event input rate.

Our solution for the Basic Problem in Nuclear Counting is not Posing the Inversion Problem

The analysis of nuclear decay belongs to the general problem of exponential analysis [8]. It is solved in principle by the inverse Laplace transformation, yielding an equation that belongs to the general class of Fredholm integral equations of the first kind, which are known to be ill-posed, or improperly posed. This means that the solution might not be unique, and may not depend continuously on the data. We have been taught that, for well-posed problems, the more data points are measured on the curve, the more accurately it can be fitted. On the contrary, for an ill-posed problem, data points in excess of those necessary make the inversion less stable. Therefore a good selection of the data points, as in the Exponential Sampling Method [9], is desirable.

Current techniques rely heavily on accurate measurement and representation of the system live time or dead time in order to correct the observed spectral (accepted) events for losses due to dead time, pile up rejection or any other discrimination that may be present [10,11]. These methods often depend on the assumptions that the studied spectrum arises from a constant rate and follows Poisson statistics. More modern approaches have included such features as the so-called zero dead time or loss-free counting [12,13] and innovative live time clocks such as the Gedcke-Hale clock [13]. Pommé et al. have reviewed many of the errors associated with various systems, including extended and non-extended dead time as well as pile up rejection at moderately high to high input rates relative to the system dead time [14].

There is no general formulation for the determination of the true input rate and its statistical uncertainty when discriminators are present, as it depends on the settings for the particular measurement. There are formulations for dead time and pile up treatment; however, they deal only with true Poisson processes. Generally there is also pile up with noise, which is equivalent to single event rejection, and there are noise events alone, which lose no real events but do introduce dead time. Neither is covered by the general approaches.

For quality spectra discriminators are always used. First of all it is always necessary to use at least a threshold discriminator. This discriminator will assure that at least a majority of the noise spikes will not start the signal processing and will not be counted as events. However, they are still on the signal trail, and will deteriorate signal quality. If discriminators are used then the flow of time is interrupted and we will not have a Poisson process anymore. We will have no guidance on how to determine the uncertainty. An example of a frequently used discriminator is a pile up discriminator. The very word of discriminator means, that there are events discriminated against. Our view is that we must know them, to make a reliable measurement.

Two fundamental approaches are distinguished: non-extendable dead time and extendable dead time systems [15]. They are also referred to as non-paralyzable and paralyzable models. The first one is used when the detector system is insensitive during the system dead time. The arrival of a second event during this period remains unnoticed, and the system becomes active again only after a constant dead time. It is imperative to have some protocol to determine the true input rate, as this is the one which may follow Poisson statistics. For the true input rate there are formulas to calculate the dead time and pile up rate. In addition, √N may be used for the uncertainty only for the true input counts (N).

When the true input rate ρ is known, then for a single Poisson process the expected output rate R for a counter with a non-extendable dead time τn is

$$R = \frac{\rho}{1 + \rho\,\tau_n} \qquad (1)$$

For extendable dead time τe

$$R = \rho\, e^{-\rho\,\tau_e} \qquad (2)$$

and for pile up with a pile up resolving time τp it is

$$R = \rho\, e^{-2\rho\,\tau_p} \qquad (3)$$

The CSX digital signal processors used here have several embedded programs. The following elements are used for the determination of the true input rate. The first collects oscilloscope traces to observe various aspects of the preamplifier signal. This allows the determination of the dead time periods associated with the reset time interval of resetting circuits, as in the case of pulsed feedback or transistor feedback preamplifiers. The second is the data collection program, which collects all the events, sorting them into a spectrum of desirable (good, accepted) events and a spectrum of undesirable (bad, unaccepted) events. The two spectra allow the analyst to sort the rejected spectrum counts into noise (non-event), single, double, etc., and all the possible combinations, to help determine the true input rate. The CSX data collection uses a non-extended dead time and is non-paralyzable; however, as the input rate increases, a larger fraction of the events will appear in the rejected spectrum as a consequence of pile up.

Because the dead time is non-extended, we can use equation (1) to determine the input rate from the output rate R and the average dead time τn, both determined from information in the two spectra. Alternatively, the live time to real time fraction can be used to scale the counted real events to the true event input rate. The number of pile up events is mainly determined from the rejected spectrum. Thus we avoid having to solve the exponential inversion problem [16], that is, solving eqs. 2 and 3 or the more complicated dead time expressions.
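The contrast between the two dead time models can be illustrated numerically. The following is a minimal sketch, not the CSX firmware: it assumes the standard non-paralyzable relation R = ρ/(1 + ρτn) and the standard paralyzable relation R = ρ exp(-ρτe), and all function names and the example numbers are hypothetical. The non-extended case inverts in closed form, whereas the extended case needs a numerical search and returns two input rates for one measured output rate.

```python
import math


def invert_non_extended(R, tau_n):
    """Closed-form inverse of R = rho / (1 + rho * tau_n)."""
    if R * tau_n >= 1.0:
        raise ValueError("output rate must stay below 1 / tau_n")
    return R / (1.0 - R * tau_n)


def extended_output(rho, tau_e):
    """Forward model for an extendable dead time: R = rho * exp(-rho * tau_e)."""
    return rho * math.exp(-rho * tau_e)


def _bisect(f, lo, hi, iters=200):
    """Plain bisection; assumes f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def invert_extended(R, tau_e):
    """Return BOTH input rates that reproduce R in the extended model."""
    peak = 1.0 / tau_e  # input rate at which the output rate is maximal
    if R <= 0.0 or R >= extended_output(peak, tau_e):
        raise ValueError("R must lie between 0 and 1 / (e * tau_e)")

    def f(rho):
        return extended_output(rho, tau_e) - R

    low_root = _bisect(f, 0.0, peak)
    hi = 2.0 * peak
    while f(hi) > 0.0:  # widen the bracket until the curve drops below R
        hi *= 2.0
    high_root = _bisect(f, peak, hi)
    return low_root, high_root


# Hypothetical example: 1 microsecond dead time, 100 kcps true input rate.
tau = 1.0e-6
rho_true = 1.0e5
rho_ne = invert_non_extended(rho_true / (1.0 + rho_true * tau), tau)
rho_low, rho_high = invert_extended(extended_output(rho_true, tau), tau)
print(rho_ne, rho_low, rho_high)
```

In this sketch the second root of the extended model lies at a much higher input rate, which is one way to see why the inversion is ambiguous without additional information.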

The general procedure is as follows for an older CSX model in multi-discriminator counting mode:

• The pre-amplifier signal is examined using oscilloscope mode to determine the general characteristics of the detector and pre-amp. This would include such information as the signal rise time and in the case of reset pre-amps the average dead time associated with each reset.

• In multi-discriminator measuring mode the event dead time is a non-extended 800 ns independent of the actual event processing time. This is simply the time required by the processor to put the event into the on board spectrum memory. Newer models will significantly reduce this time.

• The number of resets and counts in each spectrum is used in conjunction with a small communication download dead time to determine the overall dead time and thus live time fraction for correction purposes.

• The accepted and rejected event spectra are examined with events assigned to noise alone, noise piled up with real events, single events, pile up of real events etc. to obtain a true event output count. The count is also increased due to events lost due to detector resets. It usually requires an event to trigger the reset which is not processed and early events after the reset may be processed into the underflow channel depending on relative size of the reset time and processing time.

• Events can have higher energies than the selected gain will place into the spectra. These are counted in the overflow channel. It also serves as a warning, if there are too many of them, that the gain needs to be reduced as one needs to determine what part of the overflow is assigned to single or piled up events.
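The bookkeeping in the steps above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the authors' evaluation code: the class and field names are hypothetical, the sorting of the rejected spectrum into categories is assumed to have been done already by the analyst, noise piled up with one real event is assumed to be booked as a rejected single, one real event is assumed lost per preamplifier reset, and the example numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class RunTally:
    """Counts sorted by the analyst from the accepted and rejected spectra."""
    real_time_s: float
    accepted: int
    rejected_single: int      # one real event (possibly piled up with noise)
    rejected_double: int      # pile up of two real events
    rejected_triple: int      # pile up of three real events
    rejected_noise: int       # noise only, carries no real event
    resets: int               # preamplifier resets during the run
    reset_dead_time_s: float  # average dead time per reset (from scope traces)
    event_dead_time_s: float = 800e-9  # non-extended, per processed event
    download_dead_time_s: float = 0.0  # communication download dead time


def true_event_count(t: RunTally) -> int:
    """Real events seen while live, plus one assumed lost event per reset."""
    return (t.accepted + t.rejected_single + 2 * t.rejected_double
            + 3 * t.rejected_triple + t.resets)


def live_fraction(t: RunTally) -> float:
    """Live time divided by real time for a non-extended dead time."""
    processed = (t.accepted + t.rejected_single + t.rejected_double
                 + t.rejected_triple + t.rejected_noise)
    dead = (processed * t.event_dead_time_s
            + t.resets * t.reset_dead_time_s
            + t.download_dead_time_s)
    return 1.0 - dead / t.real_time_s


def true_input_rate(t: RunTally) -> float:
    """Scale the counted real events by the live time."""
    return true_event_count(t) / (t.real_time_s * live_fraction(t))


# Hypothetical run, for illustration only.
run = RunTally(real_time_s=100.0, accepted=900_000, rejected_single=50_000,
               rejected_double=20_000, rejected_triple=1_000,
               rejected_noise=5_000, resets=10_000, reset_dead_time_s=50e-6)
print(true_event_count(run), live_fraction(run), true_input_rate(run))
```

Keeping the noise-only events out of the true count while still charging them dead time mirrors the distinction drawn in the text between events that are lost and events that only consume processing time.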

One other method we employ is to create a rudimentary spectrum (all events above a threshold, with no rejection) together with a time interval histogram. Our implementation of this method is essentially dead time free and discriminator free (except for a minimum level threshold discriminator), with less than 1% pile up at input rates up to about a million counts per second. This provides a conveniently large input count range that is also practically pile up free.

These approaches offer a clearly defined and traceable method for counting the real events and deriving their uncertainty, thus fulfilling the so-called caveat emptor rule.

Half-life Measurements of 68Ga

68Ga decays by positron emission (89%) and electron capture, and 96.77% of the 68Ga decays end in the ground state of 68Zn, with a half-life of about 68 minutes. The half-life has been determined by several authors, using NaI(Tl) scintillation crystals with or without coincidence and, in some cases, ionisation chambers, all of which required standardization. The error analysis was restricted to statistical uncertainty only. The work of Iwata [17] is based on gamma ray measurement, adding a 133Ba radioactive source to the sample as a standard, and measuring the gamma-ray yield ratios as time elapses. They report a remarkable accuracy, 3.5 × 10⁻⁴. However, the error bar reflects the repeatability of the measurements, based on repeating the measurement 10 times and taking the average with its standard deviation. No standard or systematic errors are included. Here we have the problem of multi-exponentials, where the solution might not be unique [9,8-20]. Furthermore, their measurement in essence assumes that the intensity ratio of the 356 keV gamma line and the 511 keV annihilation gamma line is independent of the Ge detector response under any changing condition and over orders of magnitude change in the input rate, and that no additional source of error is present. There have been observations that detector response may depend on the input rate and noise, and on the pile up recognition capability for true events, unrelated events and noise, and that it might well depend on the radiation energy [21-23].

In the evaluated databases the weighted average of the data is recommended [24]. An error bar as small as that suggested by the Iwata measurement will dominate and essentially single-handedly determine the weighted average. Bé et al. [25] have recommended increasing the error bar arbitrarily to such a level that it will not contribute more than fifty per cent to the weighted average. The above examples clearly demonstrate the importance of obtaining the true value and the true uncertainty through a traceable process that includes all the events.
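The effect of one very small error bar on an inverse-variance weighted average, and of capping its relative weight at fifty per cent, can be sketched as follows. The function names are hypothetical and the three half-life values and uncertainties are invented for illustration; they are not taken from any evaluation or from the measurements discussed here.

```python
import math


def weighted_mean(values, sigmas):
    """Inverse-variance weighted mean, its uncertainty, and relative weights."""
    weights = [1.0 / s ** 2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / total
    shares = [w / total for w in weights]
    return mean, math.sqrt(1.0 / total), shares


def limit_dominant_weight(sigmas, max_share=0.5):
    """Inflate the smallest sigma so its relative weight is at most max_share."""
    if len(sigmas) < 2:
        return list(sigmas)
    weights = [1.0 / s ** 2 for s in sigmas]
    total = sum(weights)
    i = max(range(len(weights)), key=weights.__getitem__)
    if weights[i] <= max_share * total:
        return list(sigmas)  # nothing dominates, leave unchanged
    others = total - weights[i]
    # Solve w_new / (w_new + others) = max_share for the new weight.
    w_new = others * max_share / (1.0 - max_share)
    adjusted = list(sigmas)
    adjusted[i] = 1.0 / math.sqrt(w_new)
    return adjusted


# Hypothetical half-life values in minutes with reported uncertainties.
values = [67.71, 67.85, 67.63]
sigmas = [0.02, 0.20, 0.30]

mean_raw, sigma_raw, shares_raw = weighted_mean(values, sigmas)
sigmas_adj = limit_dominant_weight(sigmas)
mean_adj, sigma_adj, shares_adj = weighted_mean(values, sigmas_adj)
print(shares_raw, mean_raw, sigma_raw)
print(shares_adj, mean_adj, sigma_adj)
```

With these invented numbers the first value carries almost the entire weight before the adjustment and exactly half of it afterwards, which is the behaviour the recommendation aims for.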

If pile up (or other type of) discriminators are used, and / or dead time is present the Poisson distribution is not valid for nuclear counting, although it can be a reasonable approximation in many cases. Such problems are magnified in low energy regimes, where the noise amplitude overlaps the low energy signal. Although it is uncommon to talk about these issues, there are serious inadequacies and contradictions that are readily observed.

In half-life determination the true input rate is the necessary piece of information that must be determined in a series of consecutive time intervals over widely varying rates. This can be presented as a function of elapsed time and displayed as a decaying activity plot. The half-life is determined based on the assumed exponential time dependence of the event rate.
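A minimal sketch of this last step is given below, assuming the true input rates and their standard uncertainties have already been obtained for each time interval and that any constant background has been determined separately. The function name and the synthetic, noise-free data are hypothetical; the fit is an ordinary weighted straight-line fit of ln(rate) versus elapsed time using NumPy, not the authors' analysis code.

```python
import numpy as np


def fit_half_life(t_s, rate, rate_sigma, background=0.0):
    """Weighted straight-line fit of ln(rate - background) versus time.

    Returns the half-life and its standard uncertainty, in the units of t_s.
    """
    t_s = np.asarray(t_s, dtype=float)
    net = np.asarray(rate, dtype=float) - background
    sigma = np.asarray(rate_sigma, dtype=float)
    if np.any(net <= 0.0):
        raise ValueError("net rate must be positive to take its logarithm")
    y = np.log(net)
    w = net / sigma  # polyfit expects 1/sigma_y, and sigma_y = sigma / net
    coeffs, cov = np.polyfit(t_s, y, 1, w=w, cov="unscaled")
    lam = -coeffs[0]                 # decay constant
    lam_sigma = np.sqrt(cov[0, 0])
    half_life = np.log(2.0) / lam
    half_life_sigma = half_life * lam_sigma / lam
    return half_life, half_life_sigma


# Synthetic check: a 68-minute half-life sampled every 10 minutes for 4 hours.
t = np.arange(0.0, 4 * 3600.0, 600.0)
true_half_life = 68.0 * 60.0
rates = 5.0e4 * np.exp(-np.log(2.0) * t / true_half_life)
rate_sigmas = 1.0e-3 * rates  # hypothetical 0.1 % uncertainty per point
T, T_sigma = fit_half_life(t, rates, rate_sigmas)
print(T / 60.0, T_sigma / 60.0)
```

The weights passed to the fit come from the uncertainty of each input rate, so the quality of the half-life uncertainty depends directly on how well those input rate uncertainties are justified.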

In the case of usual spectrum collection, several discriminators are applied, which cause a pre-selection of the data, thus breaking the randomness and yielding a non-Poisson distribution of events. In this case the determination of the true input rate depends on corrections for the dead time, the pile up rate, the rejected event rate originating from badly shaped signals, possible unrelated events and noise events. Usually these rates are independent of one another and unknown, which often results in one or more necessary corrections being ignored, and/or an underestimation of the standard errors and systematic uncertainties.

Part of the original aim of these measurements was to explore the behaviour of the Ge detectors over a wide range of input rates and although we will not report on that here the experimental cycle was setup with this in mind. The experimental cycle takes advantage of two additional non-standard processing modes provided by the CSX processors. These are the oscilloscope trace modes, where the analog pre-amplifier signal is digitised and stored for an interval of time, and a time interval histogram mode where the inter-event interval times are binned and stored. For a Poisson process the time interval histogram should follow an exponential density function so that it forms a straight line on a semi-log plot of bin intensity versus bin number. This can be complicated by dead time and pile up, if they are present.
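The straight-line behaviour can be made explicit with a short derivation. This is a sketch for an ideal Poisson process of rate ρ with no dead time or pile up; the number of recorded intervals N, the bin width Δ and the bin index k are notation introduced here for illustration.

```latex
% Density of the time t between successive events of a Poisson process:
f(t) = \rho\, e^{-\rho t}, \qquad t \ge 0

% Expected content of bin k (covering k\Delta \le t < (k+1)\Delta) for N intervals:
n_k = N \int_{k\Delta}^{(k+1)\Delta} \rho\, e^{-\rho t}\, dt
    = N \left(1 - e^{-\rho\Delta}\right) e^{-\rho\Delta\, k}

% Taking the logarithm gives a straight line in the bin number k:
\ln n_k = \ln\!\left[ N \left(1 - e^{-\rho\Delta}\right) \right] - \rho\,\Delta\, k
```

The slope of ln n_k versus bin number is therefore -ρΔ, so the input rate follows from the fitted slope and the known bin width.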

Experimental Method

The 68Ga radionuclide was obtained from a 68Ge / 68Ga radionuclide generator. The 68Ge compound was placed in the center of a geometrical arrangement, where three Ge detectors (100 cm3, 10 cm3 and 1 cm3) and four proportional counter detectors all viewed the same source. The signature events consist of a positron annihilation (511 keV gamma ray) or electron capture to some excited Zn state which decays on the ps scale to the ground state of 68Zn while producing a gamma ray. This provides a good homogeneous single Poisson rate system. We report one of the measurements with the 100 cm3 detector.

The source to detector distance was 40 cm, and a set of measurements were cycled through during the course of the overall measurement:

• A real time measurement using the CSX4 mode, where 4 discriminating criteria are employed: rise time, signal shape, noise and pile up. The processor was set to produce 6 spectra; accepted event spectrum, total rejected events spectrum by any of the discriminators, and 4 spectra for the events rejected uniquely by each discriminator alone. A sample spectrum is presented in Figure 1, to show the general details of the spectrum for two input rates. We can determine from the rejected spectrum the rejected single, double and triple pile up events counts.

Figure 1: Spectra created by a typical spectral measurement with discriminators and dead time. The accepted event spectrum (continuous line, black in the online edition) is typical of what non-CSX processors produce. The rejected event spectrum (dashed line, red in the online edition), made up of the events rejected by the discriminators, allows a more sophisticated analysis that accounts for noise, pile up, and single event rejection of related and unrelated events. The upper panel was measured at an input rate of about 66 kcps, while the lower panel shows a measurement at about 5.4 kcps. The difference in the rejected-to-accepted spectrum ratio is readily observed for the two rates.

• A real time measurement using the CSX2 mode, where two discriminators are applied and two spectra are created one for the accepted events, and one for the rejected events. This is a fast mode measurement. The dead time of the processor for each processed event is about 800 ns.

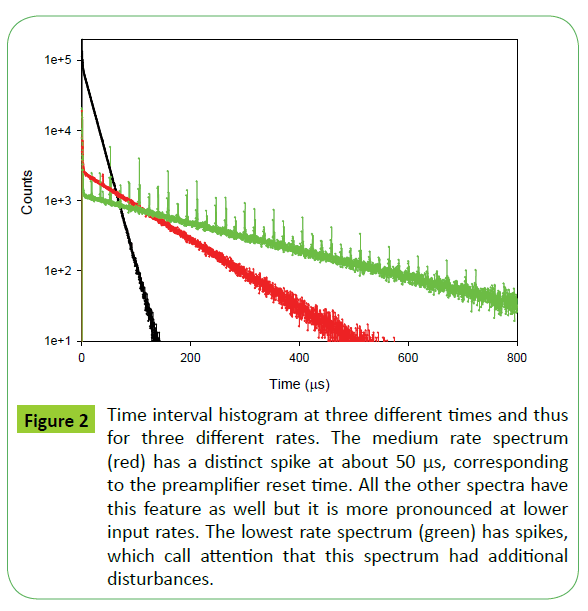

• A real time measurement with the time interval histogram mode. This mode is processor dead time free and uses no discrimination. The processor has more computing power than is needed for this analysis, therefore there is no signal processing dead time. The processor creates two spectra, one is the measured energy spectrum, and the second is the time interval histogram spectrum. As an example three spectra taken at different occasions in the time sequence are presented in Figure 2.

• An oscilloscope trace measurement with a 100 ns sampling interval.

• An oscilloscope trace measurement with a 1 microsecond sampling interval.

• An oscilloscope trace measurement with a 10 microsecond sampling interval.

This cycle was repeated throughout the measurement, beyond the time needed for the source to decay. In this way we obtain a full set of information about the details of detector performance over a wide range of input rates. The detector was contaminated by a mixture of calibration sources (60Co, 137Cs). This gives an opportunity to explore the capability when background is present. The lifetime of the calibration isotope mixture is several years; therefore the background can be considered constant over each spectrum acquisition, which lasted 109 s.

We have seen what we expected: the detector behaves differently under differing input rate conditions. The slope of the staircase-like preamplifier signal changes. Occasionally the low energy events, originating from electronic disturbances, have excessive counts in the rejected spectrum. This was not seen coincidentally with the other detectors, but all three occasionally showed independent, odd, short term behaviour. Since we had six detectors monitoring the source, we had expected that any change in the background or cosmic ray radiation would show up at the same time in each detector. In some of the spectra the number of preamplifier resets did not follow the expected rate, although the preamplifier oscilloscope traces looked normal and the accepted and individually rejected spectra showed no change in size or shape. In other spectra the underflow rejected events, whose calculated energy was less than zero, or the rejected low energy counts indicated that the preamplifier signals were changing on a timescale of a few seconds.

Pursuing the origin of the spikes in the time interval histogram (Figure 2), we have realised that the preamplifiers by design have a spectrum dependent transmission function. In other respects the preamplifier design offers several advantages for stable input rate applications. The spectral dependence at the signal processor level has been common knowledge since 1977 [22], but its extension to the preamplifier and the strength of the dependence were quite a surprise.

Figure 2: Time interval histograms at three different times and thus for three different rates. The medium rate spectrum (red) has a distinct spike at about 50 μs, corresponding to the preamplifier reset time. All the other spectra have this feature as well, but it is more pronounced at lower input rates. The lowest rate spectrum (green) has spikes, which indicate that this spectrum had additional disturbances.

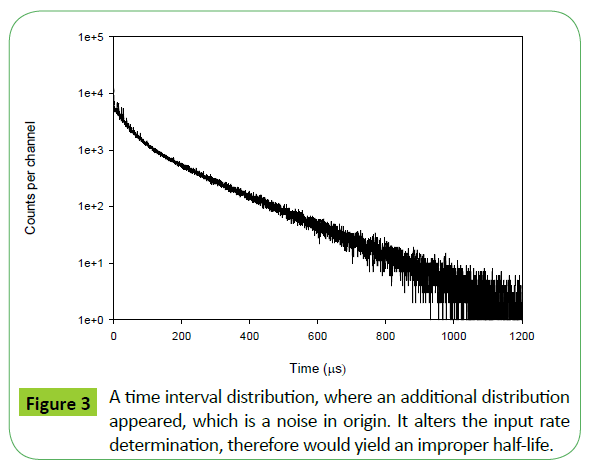

From several runs, we have selected for the evaluation only those in which the spectra seemed flawless from all the different inspection aspects. In addition, the time interval histogram is a helpful quality control indicator. In several runs the time interval histogram showed signals from other sources or origins. An example is shown in Figure 3, where an additional contribution appeared, probably originating from noise.

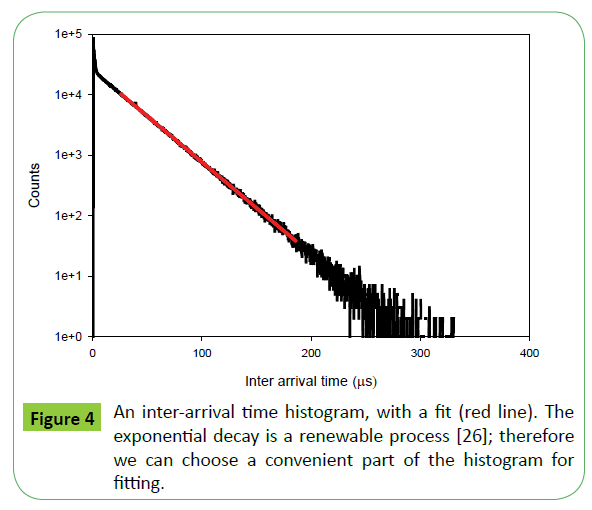

If a “clean” time interval histogram can be readily obtained, and it has an exponential form, advantage can be taken to obtain the true input rate from the slope of the semi-log plot of intensity versus time for the interval histogram. Simply speaking the slope of the line from the time interval histogram is used to determine the overall input rate for a given measurement, Figure 4. For a series of measurements the input rate can be plotted versus the real time elapsed from the beginning of the measurement series to obtain the decay curve. If the decay curve shows a simple exponential form, as it does in this case, then one can readily obtain the decay rate and thus the half-life.
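The slope evaluation can be sketched as a weighted straight-line fit of the logarithm of the bin contents. The sketch below uses simulated, ideal Poisson intervals rather than measured data; the function name, the minimum-count cut, the bin layout and the random seed are all assumptions made for illustration, and equal-width bins are assumed so that the bin centres lie on the same straight line as the bin numbers.

```python
import numpy as np


def rate_from_interval_histogram(counts, bin_edges, min_counts=20):
    """Fit ln(counts) versus bin centre; the slope is minus the input rate.

    Returns the rate and its standard uncertainty from the fit covariance.
    """
    counts = np.asarray(counts, dtype=float)
    edges = np.asarray(bin_edges, dtype=float)
    centers = 0.5 * (edges[:-1] + edges[1:])
    keep = counts >= min_counts          # skip sparsely filled bins
    y = np.log(counts[keep])
    w = np.sqrt(counts[keep])            # 1/sigma of ln(n) is about sqrt(n)
    coeffs, cov = np.polyfit(centers[keep], y, 1, w=w, cov="unscaled")
    return -coeffs[0], np.sqrt(cov[0, 0])


# Simulated ideal Poisson source (no dead time, no pile up, no background).
rng = np.random.default_rng(12345)
true_rate = 1.0e4                                  # events per second
intervals = rng.exponential(scale=1.0 / true_rate, size=200_000)
edges = np.linspace(0.0, 5.0e-4, 51)               # 50 bins of 10 microseconds
counts, _ = np.histogram(intervals, bins=edges)

rate, rate_sigma = rate_from_interval_histogram(counts, edges)
print(rate, rate_sigma)
```

Because only the slope is used, the fit can be restricted to any convenient part of the histogram, for example by excluding the bins around a known disturbance.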

Figure 4: An inter-arrival time histogram, with a fit (red line). The exponential decay is a renewal process [26]; therefore we can choose a convenient part of the histogram for fitting.

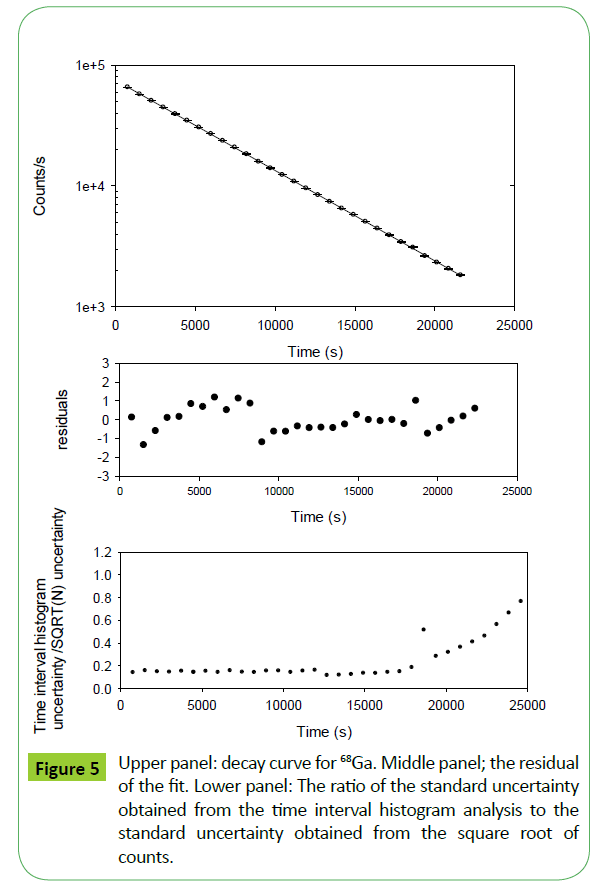

It was a new understanding for us that the uncertainty of the true input counts can be determined significantly more precisely from the time interval histogram analysis than from a simple square root of the counts. Fitting the time interval histogram, we obtain the standard uncertainty from the fit. The ratio of this uncertainty to the square root of the counts is presented in the lower panel of Figure 5. Its value is about 1/6 up to the point where the background radiation dominates the count rate. It also gives additional information about the data range that is justified for inclusion in the decay constant calculation. The upper panel shows the decay curve.

There are several types of losses. For example, there is the so-called bucket effect: when one fills a bucket using smaller containers of several sizes, the last container will overflow the bucket and information on its volume is lost. In this case it means that the last event, which triggers the reset of the preamplifier, will be lost. In addition there is the preamplifier dead time associated with the reset. These are all losses that must be accounted for. This signal processor has an option to set the upper limit for consideration of the preamplifier signal level. When this option is employed the spectrum will not be skewed, as small pulse events and large pulse events are equally credited, but the dead time increases. The first events arriving immediately after the preamplifier has returned to the operating range are usually lost, as the signal baseline is not yet settled after the reset. This represents another lost event, which in the case of the CSX is included in the rejected spectrum. This lost event could also be a pile up event, which would mean two or more lost events. This can be determined from the rejected spectrum, where single event rejection is usually readily distinguishable from pile up.

It is also important to note that, when the gain is set so that the interesting part of the spectrum spans most of the recorded spectrum, any larger energy events outside the spectrum range that also pass through the preamplifier are accounted for in the last channel as overflow events. All of these factors will have varying importance in calculating the necessary corrections.

When the preamplifier signal slope changes as the input rate changes, the discrimination criteria should be set generously so as not to discriminate more at one rate than at another. Otherwise the pile up recognition might not be the same at the different rates. Without viewing the rejected spectra we could not be sure that we had found all the necessary corrections, and we would certainly miscalculate the associated uncertainties. It appears that the higher rate gives a more stable operating regime for this particular preamplifier.

In the time interval histogram mode with a rudimentary spectrum, the rudimentary spectrum acts as both a counter and a cross check on the types of signals being detected. It indicates whether the amount of recognized noise is changing, whether the ratios of other spectral components vary with input rate and, if the gain is chosen appropriately, even the fraction of pile up events. All of these features can be used to validate or invalidate the time interval histogram. One can analyze the rudimentary spectrum to obtain the true input rate, taking into account the dead time associated with the preamplifier resets and the lost count that triggers each reset. Alternatively, when the time interval histogram displays Poisson behaviour, the logarithm of the counts versus time interval can be fitted by a straight line (Figure 4). The fit parameters come with their standard deviations, and error propagation gives the uncertainty of the counts. The reset dead time can be ignored by saving only those time intervals that occur between resets; since this is a renewal process, no correction for the lost count or dead time is then required.
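The straight-line fit of the logarithm of the counts described above can be sketched as follows. This is a minimal illustration on simulated intervals, not the CSX firmware or the authors' analysis code: the function name `fit_interval_histogram`, the `min_counts` cut, the weighting choice (variance of ln n taken as 1/n) and all numerical values are assumptions made for the example.

```python
import numpy as np

def fit_interval_histogram(bin_centers, counts, min_counts=20):
    """Weighted straight-line fit of ln(counts) against time interval.

    Model: counts(t) = A * exp(-rate * t), i.e. ln(counts) = ln(A) - rate * t.
    Returns (rate, rate_sigma) in units of 1 / (unit of bin_centers).
    """
    t = np.asarray(bin_centers, dtype=float)
    n = np.asarray(counts, dtype=float)
    keep = n >= min_counts            # assumed cut: skip sparsely filled bins
    t, n = t[keep], n[keep]
    y = np.log(n)
    w = n                             # weight = 1/var(ln n), with var(ln n) ~ 1/n
    # Closed-form weighted least squares for y = intercept + slope * t
    s, st, stt = w.sum(), (w * t).sum(), (w * t * t).sum()
    sy, sty = (w * y).sum(), (w * t * y).sum()
    delta = s * stt - st ** 2
    slope = (s * sty - st * sy) / delta
    slope_sigma = np.sqrt(s / delta)  # standard uncertainty of the slope
    return -slope, slope_sigma

# Simulated check (illustrative numbers, not measured data)
rng = np.random.default_rng(0)
true_rate = 1000.0                                  # events per second
intervals = rng.exponential(1.0 / true_rate, size=200_000)
edges = np.linspace(0.0, 5e-3, 51)                  # 0.1 ms bins up to 5 ms
counts, _ = np.histogram(intervals, bins=edges)
centers = 0.5 * (edges[:-1] + edges[1:])
rate, rate_sigma = fit_interval_histogram(centers, counts)
print(f"fitted rate = {rate:.1f} +/- {rate_sigma:.1f} 1/s")
```

Because the slope of the line does not depend on where the fitted window starts, the same function can be called on a slice such as `centers[10:], counts[10:]` to fit only a convenient part of the histogram.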

Quality Assured Measurement on 152Eu

The gamma line intensities and their uncertainties for calibration standards are very basic information in nuclear spectroscopy. We have selected 152Eu to investigate how the different signal processing approaches affect the line intensity measurements, and whether there are any hidden systematic effects, or even errors [27]. We made comparative measurements of the spectra with HPGe detectors at two institutes: a Metrology Institute and a Nuclear Research Center. In both cases we made measurements with their standard system and with the CSX4 digital signal processor. At the Metrology Institute the standard system used a pulsed feedback preamplifier, which has a staircase-like output signal, with associated digital signal processor electronics. The first measurement was made in parallel with the two processing systems, by splitting the preamplifier signal and feeding it simultaneously to the HPGe detector’s own processor and to the CSX4 processor.

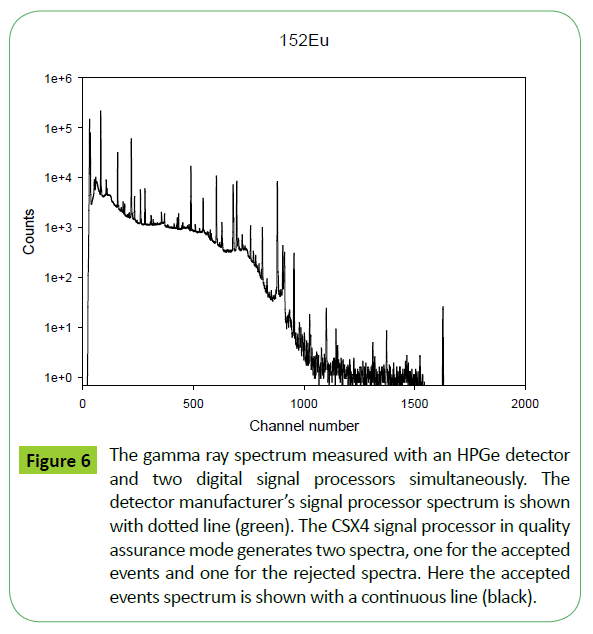

The spectra obtained by the two signal processors are presented in Figure 6. The spectrum of the detector manufacturer’s signal processor is presented with a dotted line (green in the online version), while the black continuous line is the accepted spectrum of the CSX4 signal processor. The two spectra are scaled to overlap. The HPGe detector’s own processor reported a 30% dead time and recorded about 30% fewer counts. The CSX4 processor had a dead time of about 0.1%. At first sight there is a significant difference above channel 1000. The CSX4 spectrum looks clean, and one could speculate that the excess background of the green line is related to continuous pile up that was not recognized by the manufacturer’s processor. The CSX4 signal processor has multilevel pile up recognition [21]; therefore it would eliminate this feature if it were pile up. In Figure 7 we present the accepted and rejected spectra of the CSX processor. The spike at the end of each spectrum is the overflow channel, which stores the number of events having an energy higher than the selected energy range. This number is important to know, because these events pass through the electronic system, have an associated dead time, and also contribute to the bucket effect.

Figure 6: The gamma ray spectrum measured simultaneously with an HPGe detector and two digital signal processors. The spectrum of the detector manufacturer’s signal processor is shown with a dotted line (green). The CSX4 signal processor in quality assurance mode generates two spectra, one for the accepted events and one for the rejected events. Here the accepted events spectrum is shown with a continuous line (black).

There is a peak around channel 1600, and an associated Compton plateau visible between channels 1000 and 1500, in the rejected events spectrum. We assigned it to a lead gamma line and its Compton scattered part, probably originating from the lead shielding. The ability of the CSX4 to remove this structure from the accepted spectrum allows one to see lower intensity lines in the spectrum, as is clearly visible in the 1000 to 1500 channel range of the accepted event spectrum.

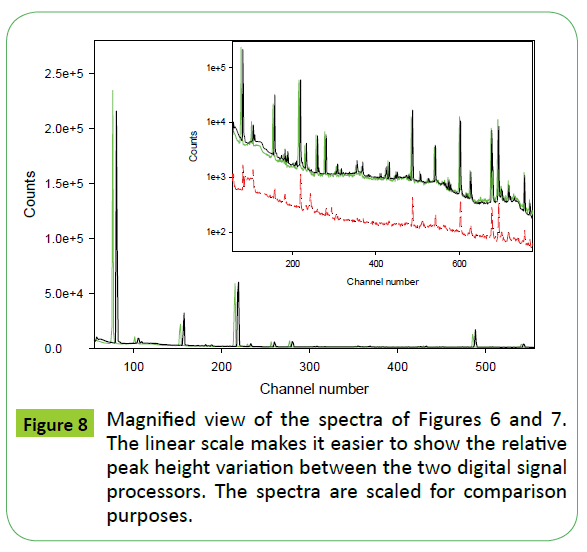

In Figure 8 we show the comparison of the two spectra on a linear scale. The surprising finding is the discrepancy in the relative line intensities between the two signal processors. Since the CSX signal processor processes all events, we can see what fractions of the peaks were rejected. The rejection is too small in amplitude to explain the discrepancies, and there is not even a trend in the discrepancies. This clearly demonstrates the importance of having all the events available, as in the CSX case, which offers a more robust determination of the peak intensities.

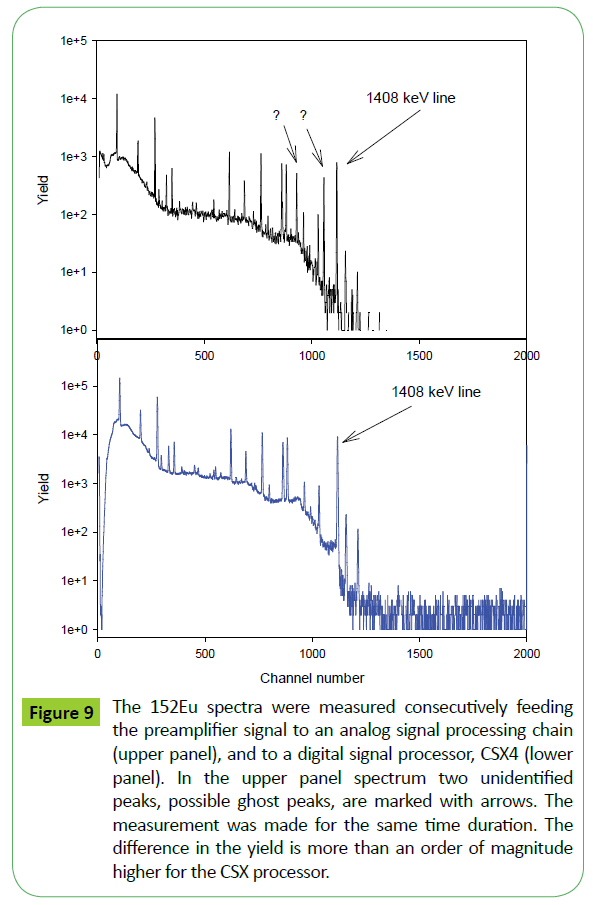

In the Nuclear Research Center, the regular system is a good quality HPGe detector with a continuous reset preamplifier and an analog nuclear electronic chain with a built-in pole zero compensator and pile up rejector. The preamplifier has a decay time constant of 100 microseconds. We made consecutive measurements, feeding the preamplifier signal to each of the two systems in turn. The two spectra are presented in Figure 9. The upper panel was recorded with the analog nuclear electronics, and the lower panel with the CSX4 digital processor. Comparing the two spectra, the following observations can be made. The CSX4 signal processor counted about 15 times more events while still providing slightly better resolution. The analog spectrum has two extra peaks, both with proper line shapes, which could therefore be misidentified as valid peaks. They are marked with arrows. Since the second measurement was made immediately after the first, changing only the BNC cable connection from the analog to the digital system, the extra peaks are almost certainly an artifact of the processing electronics. We can be confident of this because they appear in neither the accepted nor the rejected spectrum measured with the CSX processor.

Figure 9: The 152Eu spectra were measured consecutively, feeding the preamplifier signal to an analog signal processing chain (upper panel) and to a digital signal processor, CSX4 (lower panel). In the upper panel spectrum two unidentified peaks, possibly ghost peaks, are marked with arrows. Both measurements had the same duration. The yield is more than an order of magnitude higher for the CSX processor.

If we analyze the rejected spectra, we observe that some fractions of the peaks are rejected. The rejected spectrum has major contributions from the tail part of each line and that has the advantage of improving the line shape in the accepted event spectrum, while keeping an accurate account of the total events for each peak by storing the tail events in the rejected spectrum. The benefit is we can have both the good part for analysis and the rejected events to compensate for lost counts.
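The bookkeeping described above, where the accepted spectrum supplies the well-shaped part of a peak and the rejected spectrum supplies the remainder, can be illustrated with a short sketch. The function name `peak_accounting`, the region-of-interest limits and the synthetic spectra are hypothetical; background and pile up subtraction, which a real analysis would need, are deliberately left out.

```python
import numpy as np

def peak_accounting(accepted, rejected, lo, hi):
    """Sum accepted and rejected counts over channels lo..hi (inclusive).

    Returns (total_counts, accepted_fraction). The region is assumed to be
    wide enough to contain the low energy tail stored in the rejected spectrum.
    """
    acc = float(np.sum(accepted[lo:hi + 1]))
    rej = float(np.sum(rejected[lo:hi + 1]))
    total = acc + rej
    accepted_fraction = acc / total if total > 0 else float("nan")
    return total, accepted_fraction

# Synthetic spectra (illustrative numbers, not measured data)
accepted = np.zeros(2048)
rejected = np.zeros(2048)
accepted[1000:1011] = 900.0   # clean peak in the accepted spectrum
rejected[990:1011] = 10.0     # tail and distorted events in the rejected spectrum

total, fraction = peak_accounting(accepted, rejected, 985, 1015)
print(f"total = {total:.0f}, accepted fraction = {fraction:.4f}")
```

The accepted fraction evaluated peak by peak is one possible way to express how much of each line was rejected, under the assumption that every event in the region belongs to that line.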

Looking at the spectra of the analog system, there are puzzling aspects of the line shape. On the low energy side of the spectrum the peaks have a good line shape. In the second half of the spectrum, on the high energy side, however, the peak shape is distorted. These are standard, clean and relatively simple spectra. When a rejected spectrum is available, a ghost peak in the accepted spectrum would present itself as a ghost deficit in the rejected spectrum, since an event is either in the accepted or in the rejected spectrum. Such measurements require a system that not only guarantees quality but whose performance is always easily verifiable. The CSX systems offer such verifiable quality assurance. Further examples are presented in Ref. [28].

The traceability for counting the real events and its uncertainty is similar to that presented for Si, Ge and CZT detectors [6,7,21]. Counts in both the accepted and rejected spectra need to be identified either as noise or as corresponding to one or more real events, which can be a peak, its low energy tailing, or pile up. The accepted event spectrum is convoluted with a fitted pile up rate to obtain the unrecognized peak and continuum pile up representing two or more events. The rejected spectrum is then inspected; for this particular set of measurements its counts were always events from improper or distorted signals, mainly from the tail area, which are counted as single events. There can be events unrelated to the gamma ray spectrum, such as other radiation coming from the source or the background, that are actually counted by the detector. They contribute to the dead time, but it can be decided whether or not to include these events in the real event total. To the properly summed events we assign an uncertainty equal to the square root of the input counts. The total is then corrected for the losses and dead time listed in section 3. At this time we do not assign an uncertainty to the preamplifier reset related losses. The event dead time is known with good accuracy, determined only by the accuracy of the clock driving the processor.
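The accounting steps in the paragraph above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' procedure: the function name `true_input_rate`, its parameters, the default pile up multiplicity of two, and the rule of adding exactly one lost event and one fixed dead time per reset are all hypothetical simplifications of the corrections referred to in the text.

```python
import math

def true_input_rate(single_events, pileup_events, n_resets,
                    real_time, reset_dead_time, pileup_multiplicity=2.0):
    """Illustrative bookkeeping of the true input rate (all names hypothetical).

    single_events: counts identified as one real event (accepted + rejected).
    pileup_events: counts identified as pile up; each stands for
        pileup_multiplicity real events (2 is the minimum).
    n_resets: number of preamplifier resets; one lost event is added per
        reset (the bucket effect), with no uncertainty assigned to it.
    real_time, reset_dead_time: in seconds.
    Returns (rate, rate_sigma) in events per second.
    """
    summed = single_events + pileup_multiplicity * pileup_events
    sigma = math.sqrt(summed)              # square root of the summed input counts
    events = summed + n_resets             # add the events lost at each reset
    live_time = real_time - n_resets * reset_dead_time
    return events / live_time, sigma / live_time

# Illustrative numbers only
rate, rate_sigma = true_input_rate(
    single_events=98_000, pileup_events=1_000, n_resets=500,
    real_time=100.0, reset_dead_time=2e-4)
print(f"true input rate = {rate:.1f} +/- {rate_sigma:.1f} 1/s")
```

Noise counts and events unrelated to the gamma ray spectrum are assumed to have been excluded, or deliberately included, before calling the function, mirroring the choice left to the analyst in the text.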

The difference between the current approach and the previous one is that the true input rate can be determined even when discriminators are used. The use of discriminators is necessary for high quality spectra but, in contrast to other methods, here the effect of each discriminator can be visualized through the rejected events spectrum of that discriminator, as well as through the spectrum rejected by all the discriminators together. The origin of the events in the rejected spectrum can be identified, and an improved design of the measurement might eliminate them. This is an excellent tool for education and for improving the measurement quality.

Conclusion

Use of the fully digital CSX processors allowed us to present two complementary methods for solving the exponential measurement problem of determining the true input rate. The true input rate can be determined even in the presence of discriminators, because all events are processed and the rejected events are stored in the rejected spectrum. This allows the analyst to separate events into categories (noise, noise piled up with real events, real single events and piled up real events) and thus to account accurately for the real events. This has the additional advantage of increasing the live time to include the event processing time. Thus the CSX processor can operate over a wide range of input rates without incurring a large fractional dead time, even with reasonable peak processing times. With the CSX’s loss free counting methodology it is not necessary to assume that the detected gamma rays have a constant strength or follow a Poisson distribution, whereas both of these assumptions are used in the currently applied techniques.

Comparative measurements were made between standard nuclear electronics and the CSX signal processor. The CSX offers novel approaches based on the recording of all events and on the different measurement modes that are available in its built-in firmware. The accepted event spectrum provides a clean, well discriminated spectrum for the determination of peak areas, while the rejected spectrum provides the additional information required to determine both the true input rate and an energy dependent electronic efficiency for peak area correction.

Other measurement modes embedded in the CSX software also provide useful information to the analyst. For instance, the oscilloscope trace mode, which digitizes a time interval of the analog pre-amplifier signal, can be used to provide information about pre-amp dead times and signal condition. Another embedded mode of operation is the dead time free event time interval histogram along with rudimentary non-discriminated spectrum.

The evaluation of the interval histogram for the input rate is made simple by the fact that the arrival of pulses is a renewal process [15,26]. It requires only fitting an exponential distribution instead of a full spectrum. The error propagation is very simple and fully traceable. The large throughput rate capability, together with pile up and dead time free operation, allows one to measure spectra collected over a wide range of input rates, which is ideal for half-life measurements. The arrival time interval method can give about an order of magnitude smaller statistical uncertainty.

The CSX DSP takes the “black box” out of spectrum formation. With its rejected event spectrum, time interval histogram and oscilloscope trace modes of operation it offers insight into detector operation and spectrum formation, which could be included in a study curriculum on the subject. The students could interactively determine spectrum quality and observe pile up evolution and its shape as well as the spectral noise contributions. By accounting for all observed events and dead times, the true input rate that is so critical in many measurements can be determined without any unnecessary assumptions.

Acknowledgement

We acknowledge support from the Marie Curie Grant within the 7th European Community Framework Programme, which supported some of the preparation work for this study. Part of the work was supported by the ENIAC grant “Central Nervous System Imaging” (Project number: 120209). The authors thank the laboratory of Prof JL Campbell of the University of Guelph, Canada; K Taniguchi of Osaka Electro-Communication University, Japan; and M.C. Lepy of the Laboratoire National Henri Becquerel (BNM/LNHB), CEA/Saclay, France, for support of some of the research presented in the paper.

References

1. Papp T (2012) A critical analysis of the experimental L-shell Coster-Kronig and fluorescence yields data. X-Ray Spectrometry 41: 128-132.

2. Papp T, Maxwell JA, Papp AT (2009) The necessity of maximum information utilization in X-ray analysis. X-Ray Spectrometry 3: 210-215.

3. Papp T, Maxwell JA, Papp A, Nejedly Z, Campbell JL (2004) On the role of the signal processing electronics in X-ray analytical measurements. Nucl Inst and Meth B 219-220.

4. Papp T, Maxwell JA, Papp AT (2009) A maximum information utilization approach in X-ray fluorescence analysis. Spectrochimica Acta Part B 64: 61-770.

5. Miranda J, Lapicki G (2014) Experimental cross sections for L-shell X-ray production and ionization by protons. Atomic Data and Nuclear Data Tables 100: 651-780.

6. Papp T, Maxwell JA (2010) A robust digital signal processor: Determining the true input rate. Nucl Instr and Meth in Phys Res A 619: 89-93.

7. Papp T (2003) On the response function of solid state detectors, based on energetic electron transport processes. X-Ray Spectrometry 32: 458-469.

8. Istratov A, Vyvenko OF (1999) Exponential analysis in physical phenomena. Review of Scientific Instruments 70: 1233.

9. Ostrowsky N, Sornette D, Parker P, Pike ER (1981) Exponential Sampling Method. Opt Acta 28: 1059.

10. International Commission on Radiation Units and Measurements (1994) Particle counting in radioactivity measurements. ICRU Report 52.

11. Müller JW (1973) Dead-time problems. Nucl Instrum Methods 112: 47-57.

12. Westphal GP (2008) Review of loss-free counting in nuclear spectroscopy. J Radioanal Nucl Chem 275: 677-685.

13. Jenkins R, Gould RW, Gedcke D (1995) Quantitative X-ray Spectrometry.

14. Pommé S, Fitzgerald R, Keightley J (2015) Uncertainty of nuclear counting. Metrologia 52: 3-17.

15. Müller JW (1970) Counting statistics of a Poisson process with dead times. Rapport BIPM-111.

16. Pommé S (1999) Time-interval distributions and counting statistics with a non-paralysable spectrometer. Nucl Instr and Meth in Phys Res A 437: 481-489.

17. Iwata Y, Kawamoto M, Yoshizawa Y (1959) Half-Life of 68Ga. Int J Appl Radiat Isot 34: 1537.

18. Lanczos C (1959) Applied Analysis. Prentice-Hall, Englewood Cliffs 272: 72.

19. Julius RS (1972) The sensitivity of exponentials and other curves to their parameters. Comput Biomed Res 5: 473-478.

20. Grinvald A, Steinberg IZ (1974) On the analysis of fluorescence decay kinetics by the method of least-squares. Anal Biochem 59: 583.

21. Papp T, Papp AT, Maxwell JA (2005) Quality assurance challenges in x-ray emission based analyses, the advantage of digital signal processing. Analytical Sciences 21: 737-745.

22. Statham PJ (1977) Pile-up rejection: Limitations and corrections for residual errors in energy-dispersive spectrometers. X-Ray Spectrometry 6: 94.

23. Pommé S, Denecke B, Alzetta JP (1999) Influence of pileup rejection on nuclear counting, viewed from the time-domain perspective. Nucl Instr and Meth in Phys Res A 426: 564.

24. McCutchan EA (2012) Nuclear Data Sheets for A = 68. Nuclear Data Sheets 113: 1735.

25. Bé MM, Chisté V, Dulieu C, Mougeot X, Chechev VP, et al. (2013) Monographie BIPM-5. Table of Radionuclides 7: 14-245.

26. Cox DR (1962) Renewal theory 142.

27. JCGM (2008) Evaluation of measurement data – Guide to the expression of uncertainty in measurement.

28. Papp T, Maxwell JA, Gál J, Király B, Molnár J, et al. (2013) Quality assurance via digital signal processing. Instrumentation for digital nuclear spectroscopy 58-75.
